So yet again on the web I've seen posts by journalists saying that 1080p is worthwhile, and that developers should be spending all their time trying to make full 1080p/60fps games, and yet again, it gets my back up...

A few years back I did a post about the mass market, which was an article I wrote way back in 2004 (A new direction in gaming), and it seems to me this is the same thing coming up again. I mean, seriously, who do you want to buy your games: a handful of journalists, or millions of gamers?

Making a full 1080p/60fps game can be hard - depending on the game of course - but ask professional developers just how much time and effort they spend making a game run at 60fps while keeping the detail level up, and they'll tell you it's incredibly tricky. You end up leaving a lot of performance on the table because you can't let busy sections - explosions, multiplayer, or just a load of baddies appearing at the wrong time - slow down the game even a fraction, because everyone, no matter who they are, will notice a stutter in movement and gameplay. And that's the crux of the problem. Even a simple driving game, where it's all pretty much on rails, so you know poly counts and the like, has to leave a lot on the table. Otherwise, if there is a pileup and there are more cars, particles and debris spread around than you thought there would be, then it'll all slow down and look utterly horrible.

Take a simple example. You're in a race with 5 other cars; the cars are in single file, spread out over the track, as the developer expected them to be. You're in the lead with a clear track. So let's say the developer has accounted for this and is using 90% of the machine's power for most of the track, and in certain areas they reduce this to account for more cars and effects - like the start line, for example. But suddenly you lose control and spin, the car crashes, and bits are spread everywhere along with lots of particles. Now the other cars catch up, hit you, and it gets messy. Suddenly, that 10% spare just wasn't enough. It needs a good 30-40% to account for particle mayhem and damage, and the game slows down. As a gamer, you've now dropped from 60 to 30 - or perhaps even lower, depending on how many cars are in the race (like an F1 race, for example). Now, 30fps isn't terrible, and even 20fps would probably be fine, but the thing is... the player has experienced the 60fps responsiveness, and now it's suddenly not handling the same way, and they notice.
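To put rough numbers on that pileup, here is a minimal sketch. It assumes a 60Hz display with simple double-buffered vsync, where a frame that overruns its slot has to wait for the next refresh. The `displayed_fps` helper is hypothetical, and the 125% load is just the 90% baseline plus a spike in the 30-40% range from the example; none of this comes from a real engine.

```python
import math

REFRESH_HZ = 60  # assumed display refresh rate


def displayed_fps(load_fraction, refresh_hz=REFRESH_HZ):
    """Frame rate the player sees for a given per-frame load.

    load_fraction is the frame cost relative to one refresh interval,
    so 0.9 means the frame uses 90% of the roughly 16.7 ms available at 60Hz.
    Assumes double-buffered vsync (an assumption, not a measured result).
    """
    # A frame that misses its refresh waits for the next one, so the cost
    # is rounded up to a whole number of refresh intervals.
    intervals = max(1, math.ceil(load_fraction))
    return refresh_hz / intervals


if __name__ == "__main__":
    # Clear track, pileup, and a much bigger pileup (illustrative loads).
    for load in (0.90, 1.25, 2.30):
        print(f"load {load:.0%}: {displayed_fps(load):.0f} fps")
```

Under those assumptions the 90% case holds 60fps, but even a modest overrun to 125% falls straight to 30fps, which is why that 10% spare is nowhere near enough.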

The problem is people notice change, even if they don't understand the technical effects or reasons behind it. So going from 60 to 30 will be noticed by everyone, even when you compensate for it. It is much harder to notice going from 30 to 20 when there is frame rate correction going on, but many can still "feel it".
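One common reading of "frame rate correction" is scaling movement by the time each frame took, so the game covers the same ground per second whatever the frame rate. The sketch below assumes that reading; `advance` and `simulate` are hypothetical helpers, and the speed value is made up.

```python
def advance(position, speed, dt):
    """Move by speed (units per second) scaled by the frame time dt (seconds)."""
    return position + speed * dt


def simulate(fps, seconds, speed=10.0):
    """Run a fixed-rate loop for `seconds` and return the final position."""
    dt = 1.0 / fps
    position = 0.0
    for _ in range(round(fps * seconds)):
        position = advance(position, speed, dt)
    return position


if __name__ == "__main__":
    # Same distance covered at every frame rate, just in fewer, bigger steps.
    for fps in (60, 30, 20):
        print(f"{fps} fps: travelled {simulate(fps, 2.0):.3f} units")
```

The distance comes out the same at 60, 30 and 20fps, but each step is bigger at the lower rates, and that coarser stepping is the part players can still "feel".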

So, if I'm saying you shouldn't do 60fps or full 1080p (if the game struggles to handle it), what should you do? Well, what people really notice isn't smooth graphics, but smooth input. Games have to be responsive, and it's the lag on controls that everyone really notices. If you move left and it takes a few frames for that to register, everyone cares, not just hard core gamers. But if a game responds smoothly, then whether you're at 60 or 30 - or even 20! - gamers are much more forgiving. This is mainly because once they're in the thick of the action, they are concentrating on the game, not the pretty graphics or effects. I can prove this too, even to hard core developers/gamers. Years ago, when projectors first came out, they were made with reasonably low res LCD panels, and you got what was called the "Screen Door" effect. Pixels, when projected, didn't sit right next to each other; there were spaces between them. Now when you started a film, you'd see this, and you would end up seeing nothing BUT the spaces. However, as soon as you got into watching and, more importantly, enjoying the film, that went away. You never saw the flaws in the display, because you were concentrating on the content.

The same is true of games. Sure, you power up a game and ooo... look, it's super high res, and ever so smooth! But 10 minutes later, you're deep in the game and couldn't give a crap about the extra pixels or the slightly smoother game; all you care about is the responsiveness of it all. If it moves when you tell it to, you're happy.
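A quick bit of arithmetic shows why responsiveness and frame rate aren't the same thing. This is only a sketch: `input_lag_ms` is a hypothetical helper, and the frame counts are illustrative rather than measured from any game.

```python
def input_lag_ms(frames_of_delay, fps):
    """Time between a button press and the game reacting, in milliseconds."""
    return frames_of_delay * 1000.0 / fps


if __name__ == "__main__":
    # (frames of delay, frame rate) pairs chosen purely for illustration.
    cases = [(3, 60), (1, 30), (1, 20)]
    for frames, fps in cases:
        lag = input_lag_ms(frames, fps)
        print(f"{frames} frame(s) of delay at {fps} fps: {lag:.1f} ms")
```

With these made-up numbers, a 30fps game that reacts on the next frame responds faster than a 60fps game that sits on your input for three frames.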

So what about the 1080p aspect? Well, years ago, when 1080p started to become the norm and shops had both 720p and 1080p large screen TVs in store, I happened to be in one where they had the same model, one 1080p and one 720p, hanging right next to each other, playing the same demo. I went right up to them, and could still hardly tell the difference. These were two 50" displays, yet with my nose almost touching them, it was hard to tell where the extra pixels were. Now, if you have a 1080p projector and are viewing on a 2 to 3-meter-wide screen, I'm pretty sure you'll notice, but on a TV, when you're running/driving at high speed? No chance. Every article about resolution in games shows stills, and this is the worst thing to use, as that's not how you play games. It's also worth remembering that at that moment in time you're not playing, so you're not concentrating on the game; you're doing nothing but searching for extra pixels, so it's yet another con really.

So what's the benefit to running slower, at a slightly reduced resolution? Well, 1080p at 30fps means you can draw at least twice as much per frame as at 60fps. That's a LOT of extra graphics you can suddenly draw, and even if you don't need/want to draw that much more, it makes your coding life MUCH simpler. You no longer have to struggle to maintain the frame rate, or worry about when there is a sudden increase in particles or damage - or just more characters on screen.

What about resolution? Well, 720p is still really high-res. There are still folk getting HD TVs and watching standard definition TV, thinking it's so much sharper than it used to be! The mass market usually doesn't "get" what higher res is until they see it side by side, and once things are moving and they are concentrating on other things, they will neither know nor care.

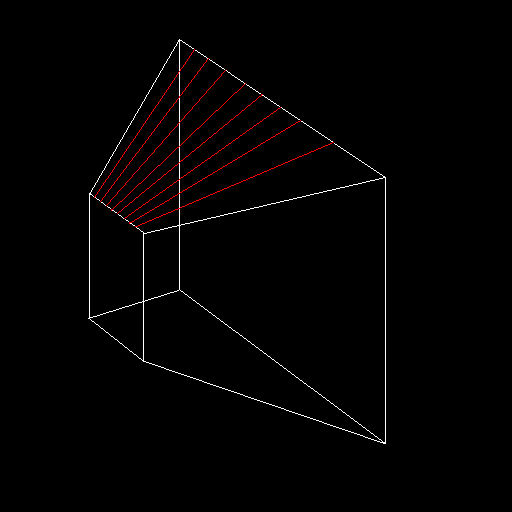

At 720p, 30fps you can draw over 4 times as much as at 1080p, 60fps. That's a LOT of extra time and drawing. Imagine how much more detailed you could make a scene with that amount of extra geometry or pixel throughput. Even at 3 times, you could leave yourself a massive amount of spare bandwidth to handle effects and keep gameplay smooth.
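The "over 4 times" figure falls out of simple pixel counting. Here is a minimal sketch, assuming 1920x1080 and 1280x720 as the two resolutions and treating cost as pixels drawn per second. The `headroom` helper is hypothetical, and real costs such as geometry don't scale this neatly.

```python
def pixels_per_second(width, height, fps):
    """Raw pixel throughput needed to fill the screen every frame."""
    return width * height * fps


# The target the journalists want: full 1080p at 60fps.
BASELINE = pixels_per_second(1920, 1080, 60)


def headroom(width, height, fps):
    """How many times more work per pixel you can afford versus 1080p/60fps."""
    return BASELINE / pixels_per_second(width, height, fps)


if __name__ == "__main__":
    modes = {
        "1080p/60": (1920, 1080, 60),
        "1080p/30": (1920, 1080, 30),
        "720p/60": (1280, 720, 60),
        "720p/30": (1280, 720, 30),
    }
    for name, mode in modes.items():
        print(f"{name}: {headroom(*mode):.2f}x the budget of 1080p/60")
```

Halving the frame rate doubles the budget, dropping to 720p gives 2.25x, and doing both gives 4.5x.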

2D games "probably" won't suffer too much from this problem, although on mobile web or consoles like the OUYA they will, as the chips/tech themselves just aren't very quick.

So rather than spend all your time with tech and struggle to maintain a frame rate most gamers won't notice, shouldn't you spend all your time making a great game instead? If you're depending on pretty pixels to make your game enjoyable, it probably isn't a great game to start with, and you should really fix that.

Games with pretty pixels sell a few copies; truly great games sell far more. While it's true that games with both will sell even more, the first rule of game development is that only games you release will sell anything at all, and while playing with tech is great fun, constant playing/tuning doesn't ship the game.